EU AI Act Enters Into Force on August 1, 2024

Abstract

Once the AI Act is in force, its rules will become applicable according to the transition periods set out in the Act. From February 2, 2025, prohibited AI practices will be banned. From August 2, 2025, rules regarding so-called «general purpose AI models» will apply. Most other rules will take effect from August 2, 2026. The Act takes a risk-based approach. It imposes transparency requirements on AI systems with limited risks and more restrictive obligations on high-risk AI systems. Providers and deployers of AI systems may be required to comply with the AI Act even if they are located outside the EU, which makes the Act directly relevant for Swiss companies.

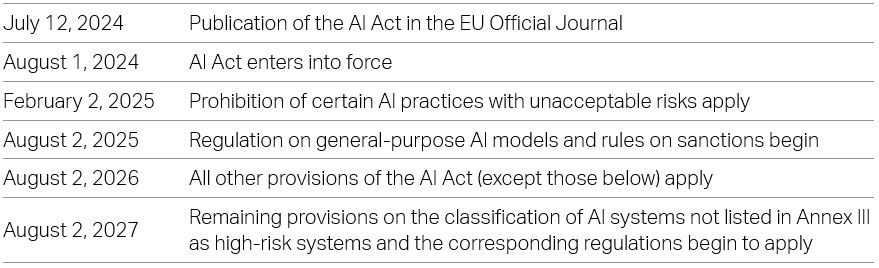

What is the Timeline for the AI Act to Take Effect?

On July 12, 2024, the EU AI Act (Regulation (EU) 2024/1689) was published in the EU’s Official Journal, which started the timeline for the Act to become effective. On August 1, 2024, it will enter into force. In general, the Act provides for a two-year transition period, but some rules will apply earlier and others later. Most importantly, on February 2, 2025, six months after the Act enters into force, the rules on prohibited AI practices will apply. Twelve months after the Act enters into force, the rules on general-purpose AI (GPAI) models become applicable.
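As a rough illustration of how the staggered dates interact, the following Python sketch returns the rule sets that already apply on a given day. It encodes only the three application dates named in this bulletin; the rules that apply later are deliberately not modelled, and the function name `rules_applicable_on` is hypothetical.

```python
from datetime import date

# Application dates as stated in this bulletin (simplified).
# Rules that apply after August 2, 2026 are not modelled here.
MILESTONES = [
    (date(2025, 2, 2), "prohibited AI practices"),
    (date(2025, 8, 2), "general-purpose AI (GPAI) models"),
    (date(2026, 8, 2), "most other rules"),
]


def rules_applicable_on(day: date) -> list[str]:
    """Return the rule sets whose application date has been reached on `day`."""
    return [rules for start, rules in MILESTONES if day >= start]


if __name__ == "__main__":
    print(rules_applicable_on(date(2026, 1, 1)))
```

On January 1, 2026, for example, the sketch lists the prohibitions and the GPAI rules, but not yet the bulk of the remaining obligations.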

The following table provides an overview of the timeline:

To Whom Does the AI Act Apply?

The AI Act’s scope is exceptionally broad, both in terms of the persons it covers and its territorial reach. It applies not only to developers of AI systems and GPAI models and to persons placing these on the market or putting them into service (referred to as «providers» in the Act), but also to all persons using an AI system in the course of their professional activities (referred to as «deployers»). It captures providers and deployers within the EU and, to some extent, also those outside the EU who access the EU market.

Specifically, the AI Act applies to, inter alia:

- Providers placing on the market or putting into service AI systems or placing on the market GPAI models in the EU, whether located within or outside the EU;

- Deployers of AI systems within the EU;

- Providers and deployers of AI systems outside the EU, where the output produced by the AI system is used in the EU;

- Importers and distributors of AI systems;

- Product manufacturers placing on the market or putting into service an AI system together with their product and under their own name or trademark.

The AI Act is directly relevant for companies established in Switzerland because it applies to providers and deployers located in third countries: Swiss companies making AI systems or GPAI models available in the EU, supplying AI systems for use in the EU, or operating AI systems whose output is used in the EU fall within the Act’s scope. Likewise, Swiss companies that use AI systems as deployers to generate content used in the EU are also subject to the Act.
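The list of covered persons can be read as a rough screening checklist. The Python sketch below encodes it under simplifying assumptions: the `Operator` class, its fields, and the role names are hypothetical; each flag stands for a legal assessment that must be made separately; and importers and distributors are treated as covered because the list above names them without further conditions. It illustrates the structure of the scope rules and is not a substitute for a scope analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Operator:
    """Hypothetical facts about a company; field names are illustrative only."""
    role: str  # "provider", "deployer", "importer", "distributor" or "product_manufacturer"
    located_in_eu: bool = False
    places_on_eu_market: bool = False  # places on the market or puts into service in the EU
    output_used_in_eu: bool = False


def ai_act_applies(op: Operator) -> bool:
    """Rough screen against the bulletin's list of covered persons; not a legal test."""
    if op.role == "provider" and op.places_on_eu_market:
        return True  # irrespective of where the provider is located
    if op.role == "deployer" and op.located_in_eu:
        return True
    if op.role in ("provider", "deployer") and op.output_used_in_eu:
        return True  # third-country providers and deployers
    if op.role in ("importer", "distributor"):
        return True  # listed without further conditions in the bulletin
    if op.role == "product_manufacturer" and op.places_on_eu_market:
        return True
    return False


if __name__ == "__main__":
    swiss_chatbot_user = Operator("deployer", output_used_in_eu=True)
    print(ai_act_applies(swiss_chatbot_user))
```

For instance, a Swiss deployer whose chatbot output is used in the EU is flagged as in scope, while a Swiss deployer whose AI use has no EU nexus (`Operator("deployer")`) is not.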

Switzerland has so far taken a wait-and-see approach to regulating AI. However, the Swiss Federal Council has instructed the Federal Department of the Environment, Transport, Energy and Communications (DETEC) to identify potential approaches to regulating AI by the end of 2024. Whether Switzerland follows suit and implements its own AI regulation remains to be seen.

How Are AI Systems Defined?

«AI systems» are defined broadly as machine-based systems designed to operate with varying levels of autonomy, that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infer, from the input received, how to generate outputs, such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. President Biden’s executive order (see our Bulletin) and the OECD AI Principles use similar definitions.

While the definition is broad, it should not be applied excessively. According to the AI Act’s recitals, the definition of an AI system is based on the system’s key characteristics, and AI systems are distinguished from traditional software systems, which are based on rules defined solely by natural persons to execute operations automatically. The key characteristic of an AI system is its capability to infer, i.e., to derive output from input in a manner not solely based on the rules given by the human programmer. The definition therefore captures a broad range of AI-based applications, such as AI systems with a conversational interface that enables users to enter prompts for the system to generate output, but it does not capture traditional «if-then» algorithms for which the programmer predetermines the output through programming logic. Accordingly, traditional software or formulas in spreadsheets would not qualify as AI systems, even if they are executed automatically.

What Are the Key Features of the AI Act?

The AI Act adopts a risk-based approach, meaning that the regulatory obligations applicable to a specific AI system depend on the risks associated with such system.

- Prohibited practices: Certain practices are prohibited because they are considered to pose an unacceptable level of risk. Prohibited practices include using AI systems that deploy subliminal techniques beyond a person’s consciousness or purposefully manipulative techniques to materially distort a person’s behavior, exploiting vulnerabilities due to age, disability, or a specific social or economic situation, evaluating or classifying people based on their social behavior, and, subject to certain exceptions, using real-time remote biometric identification in publicly accessible spaces for law enforcement purposes. These prohibitions apply from February 2, 2025.

- High-Risk AI Systems: An AI system is considered a high-risk system if it is a safety component of a product (or is itself a product) that is regulated by specific EU laws and must undergo a third-party conformity assessment (such as toys, elevators, radio equipment, or medical devices), or if it falls into one of the categories listed in Annex III of the AI Act. High-risk AI systems are subject to detailed regulations, as outlined below.

The categories listed in Annex III include a range of AI systems with specific uses, including:

– Biometrics, such as remote biometric identification or emotion recognition;

– Critical infrastructure, such as AI systems intended to be used as a safety component in critical digital infrastructure, road traffic, or in the supply of water, gas, heating, or electricity;

– Education and vocational training, such as to determine access or admission to educational and vocational training institutions;

– Employment, workers’ management, and access to self-employment, such as for recruitment;

– Law enforcement, such as to evaluate the reliability of evidence;

– Migration, asylum, and border control management, such as to assess a risk posed by a natural person entering the EU; and

– Administration of justice and democratic processes, such as the use of AI systems by judicial authorities to assist in researching and interpreting the facts and the law.

As an exception, an AI system falling within one of the categories listed in Annex III is not considered a high-risk AI system if it does not pose a significant risk of harm to the health, safety, or fundamental rights of natural persons, including if it does not materially influence the outcome of decision making. Accordingly, not every AI system used in an application listed in Annex III is per se a high-risk AI system.

Under the AI Act, such a system does not pose a significant risk if it only performs a narrow procedural task, improves the result of a previously completed human activity, detects decision-making patterns or deviations from prior decision-making patterns without being meant to replace or influence the previously completed human assessment absent proper human review, or only performs a preparatory task. This exception does not apply where the AI system performs profiling of natural persons; such a system is always considered high-risk.

- AI Systems Subject to Transparency Obligations: For certain AI systems that are neither prohibited nor classified as high-risk, the Act imposes transparency obligations. These obligations capture AI systems intended to interact directly with natural persons or to generate synthetic audio, image, video, or text content. AI-generated content must be marked as such. The transparency rules apply to both providers and deployers of such AI systems. In practice, this will be relevant for companies using AI systems in day-to-day applications, such as chatbots in client interactions or tools that generate images or videos. Such companies must ensure that users know they are interacting with an AI system or that the content has been generated artificially. The rules are intended, inter alia, to prevent people from being misled by undisclosed deep fakes.

- Other AI Systems: AI systems that do not fall into any of the foregoing categories, and that do not qualify as GPAI models, are not subject to any of the category-specific obligations of the AI Act.
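The tiered logic above, including the Annex III exception for systems that do not pose a significant risk, can be summarized as a decision procedure. The following Python sketch is a simplification under stated assumptions: the `AISystemProfile` class and its fields are hypothetical, every boolean stands for the outcome of a separate legal analysis, and the function returns a single category, mirroring the tiered presentation above.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited practice"
    HIGH_RISK = "high-risk AI system"
    TRANSPARENCY = "subject to transparency obligations"
    OTHER = "other AI system"


@dataclass(frozen=True)
class AISystemProfile:
    """Hypothetical answers to questions a legal assessment must settle."""
    prohibited_practice: bool = False
    annex_i_safety_component: bool = False  # safety component of a regulated product
    annex_iii_use: bool = False             # use case listed in Annex III
    performs_profiling: bool = False        # profiling of natural persons
    narrow_procedural_task: bool = False
    improves_completed_human_activity: bool = False
    detects_patterns_with_human_review: bool = False
    preparatory_task_only: bool = False
    interacts_or_generates_content: bool = False  # e.g. chatbots, synthetic media


def classify(system: AISystemProfile) -> RiskTier:
    """Return the most restrictive tier that applies, checked from the top down."""
    if system.prohibited_practice:
        return RiskTier.PROHIBITED
    if system.annex_i_safety_component:
        return RiskTier.HIGH_RISK
    if system.annex_iii_use:
        # An Annex III system escapes high-risk status only if at least one
        # exception condition is met and it does not profile natural persons.
        exception_met = any((
            system.narrow_procedural_task,
            system.improves_completed_human_activity,
            system.detects_patterns_with_human_review,
            system.preparatory_task_only,
        ))
        if system.performs_profiling or not exception_met:
            return RiskTier.HIGH_RISK
    if system.interacts_or_generates_content:
        return RiskTier.TRANSPARENCY
    return RiskTier.OTHER


if __name__ == "__main__":
    cv_pre_sorter = AISystemProfile(annex_iii_use=True, preparatory_task_only=True)
    print(classify(cv_pre_sorter).value)
```

The profiling check sits inside the Annex III branch on purpose: the same preparatory recruitment tool flips back to `RiskTier.HIGH_RISK` as soon as `performs_profiling=True`.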

Which Rules Apply to High-Risk AI Systems?

The AI Act addresses the risks resulting from high-risk AI systems in several ways. First, it defines certain requirements for high-risk AI systems. Second, it stipulates certain obligations for providers and deployers of high-risk AI systems and for certain other third parties. Third, it establishes a conformity assessment procedure for high-risk AI systems.

- The requirements applicable to high-risk AI systems include a risk management system, appropriate data governance and management practices for training models, technical documentation demonstrating compliance, automatic logging to ensure a level of traceability appropriate to the AI system’s intended purpose, and transparency sufficient to enable deployers to interpret the system’s output. In addition, the AI Act requires high-risk AI systems to be designed and developed in a way that natural persons can adequately oversee them to prevent or minimize the risks to health, safety, or fundamental rights. High-risk AI systems must also be designed and developed such that they achieve an appropriate level of accuracy, robustness, and cybersecurity based on benchmarks and measurement methodologies that the EU Commission will develop.

- Providers of high-risk AI systems must ensure compliance with the requirements applicable to high-risk AI systems. In addition, providers must provide information sufficient to identify them as the provider of the high-risk AI system, implement a quality management system, maintain documentation, keep logs, and ensure that the high-risk AI system undergoes the required conformity assessment procedure, that the conformity declaration is drawn up, and that the system bears the requisite «CE» mark demonstrating conformity with EU health and consumer safety regulation.

In addition, on request from a competent authority, providers of high-risk AI systems must demonstrate that the system conforms with the requirements in the Act. Whether the conformity assessment requires the involvement of a third party (referred to as a «notified body») or can be performed by the provider internally depends on the specific high-risk AI system. Only a limited number of high-risk AI systems require providers to involve a notified body to conduct the assessment.

- Deployers of high-risk AI systems must take appropriate technical and organizational measures to ensure that they use the systems in accordance with the instructions for use, and they must assign natural persons who have the necessary competence, training, and authority, as well as the necessary support, to oversee the use of the high-risk AI system.

To the extent they exercise control over the input data, deployers must also ensure that it is relevant and sufficiently representative in view of the intended purpose of the high-risk AI system. In addition, the Act requires deployers to monitor the operation of high-risk AI systems and to report certain risks and incidents to the provider of the system. The requirements further include record-keeping obligations and transparency obligations vis-à-vis workers.

How Are GPAI Models Defined?

The regulations regarding GPAI models were added during the legislative process to account for the launch of AI models such as the various versions of GPT, Gemini, and Mistral. GPAI models are defined as AI models that display significant generality and are capable of competently performing a wide range of distinct tasks, regardless of the way in which the models are placed on the market. They can be integrated into a variety of downstream systems or applications. AI models that are used for research, development, or prototyping activities before they are placed on the market are excluded from the definition.

The term «AI model» as used in the definition of the GPAI model is not defined, but this term generally refers to an algorithmic model that uses statistics, machine learning, and similar techniques to create an output based on an input, and which must be combined with further components (such as a user interface) to become an AI system.

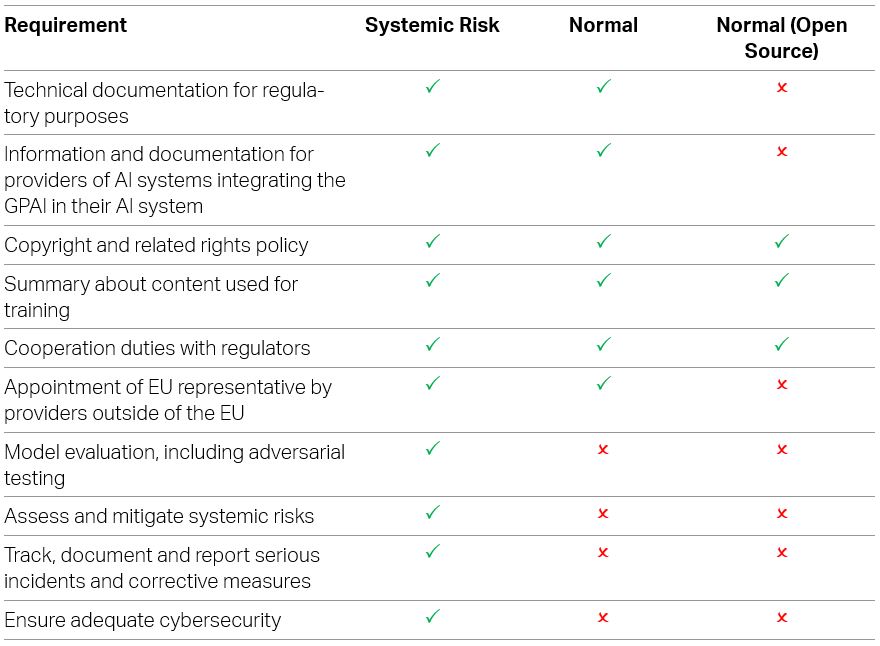

Which Rules Apply to GPAI Models?

The regulations applicable to GPAI models depend on their classification. Pursuant to the AI Act, GPAI models are classified as posing systemic risk, as normal, or as normal and released under an open-source license. A GPAI model is classified as posing systemic risk if it has high-impact capabilities, which are presumed if the cumulative amount of computation used to train the model, measured in floating-point operations (FLOPs), is greater than 10^25.
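The compute-based presumption reduces to a single comparison. The Python sketch below applies it to sort a model into one of the three categories; the names `classify_gpai` and `GPAICategory` are hypothetical, the training-compute figure is assumed to be known, and other routes by which a model may be treated as posing systemic risk are not modelled.

```python
from enum import Enum

# Presumption threshold: cumulative training compute greater than 10^25 FLOPs.
# Stored as the float 1e25 so that float inputs compare consistently with it.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


class GPAICategory(Enum):
    SYSTEMIC_RISK = "GPAI model with systemic risk"
    OPEN_SOURCE = "normal GPAI model released under an open-source license"
    NORMAL = "normal GPAI model"


def classify_gpai(training_flops: float, open_source: bool = False) -> GPAICategory:
    """Apply only the compute-based presumption described in the bulletin."""
    if training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD:
        # High-impact capabilities are presumed; an open-source release
        # does not move the model out of the systemic-risk category.
        return GPAICategory.SYSTEMIC_RISK
    if open_source:
        return GPAICategory.OPEN_SOURCE
    return GPAICategory.NORMAL


if __name__ == "__main__":
    print(classify_gpai(3e25, open_source=True).value)
```

Because the Act requires compute *greater than* 10^25, a model trained with exactly `1e25` FLOPs stays below the presumption in this sketch.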

The following table illustrates the regulations applicable to the three categories:

Providers of GPAI models with systemic risk must perform model evaluations pursuant to standardized protocols, assess and mitigate systemic risks, keep track of, document, and report serious incidents and possible corrective measures, and ensure an adequate level of cybersecurity protection.

Importantly, the specific rules governing GPAI models apply in addition to, and not in lieu of, the general rules governing AI systems. Thus, a provider that integrates its GPAI model into a high-risk AI system must comply with the rules governing GPAI models as well as with those governing the specific high-risk AI system.

How is the AI Act Enforced?

The AI Act provides for supervisory and enforcement bodies at the EU and Member-State level. At the EU level, the AI Act provides for an AI Office and a European Artificial Intelligence Board. With respect to GPAI models, the European Commission has the exclusive supervisory and enforcement power. At the Member-State level, each Member State shall establish or designate as national competent authorities at least one notifying authority and at least one market surveillance authority for the purposes of the AI Act.

What Sanctions Apply for Non-Compliance?

Member States will establish rules for penalties and other enforcement measures. Engaging in a prohibited AI practice as described above is subject to administrative fines of up to EUR 35 million or, if the offender is a company, up to 7 percent of its total worldwide annual turnover, whichever is higher. Violations of certain obligations associated with high-risk AI systems can give rise to fines of up to EUR 15 million or, if the offender is a company, up to 3 percent of its total worldwide annual turnover, whichever is higher. Supplying incorrect, incomplete, or misleading information to notified bodies or competent authorities is also a fineable offense.

The fine amount will be determined based on all the relevant circumstances, including the nature, gravity, and duration of the infringement, prior misconduct, the annual turnover and market share of the offender, and any other aggravating or mitigating factors.
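The «whichever is higher» rule is simple arithmetic: in both tiers described above, the percentage-based cap exceeds the fixed amount once total worldwide annual turnover is above EUR 500 million. The Python sketch below computes only the statutory ceiling, not the fine a regulator would actually impose after weighing the circumstances. The tier names are hypothetical, and the `is_sme` flag adds one rule from the Act that this bulletin does not discuss: for SMEs, including start-ups, the lower of the two amounts applies instead. `Decimal` is used so that percentages of large turnovers are computed exactly.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class FineTier:
    """Upper limit of a fine: a fixed amount or a share of worldwide turnover."""
    fixed_cap_eur: Decimal
    turnover_percent: Decimal


# Hypothetical names for the two tiers described in the bulletin.
PROHIBITED_PRACTICE = FineTier(Decimal("35000000"), Decimal("7"))
HIGH_RISK_OBLIGATION = FineTier(Decimal("15000000"), Decimal("3"))


def fine_cap(tier: FineTier, worldwide_turnover_eur: Decimal, is_sme: bool = False) -> Decimal:
    """Return the maximum possible fine (not the fine actually imposed)."""
    turnover_cap = worldwide_turnover_eur * tier.turnover_percent / Decimal(100)
    if is_sme:
        # For SMEs and start-ups, the lower of the two amounts applies.
        return min(tier.fixed_cap_eur, turnover_cap)
    # Otherwise, the higher of the two amounts applies.
    return max(tier.fixed_cap_eur, turnover_cap)


if __name__ == "__main__":
    turnover = Decimal("1000000000")  # hypothetical EUR 1 billion turnover
    print(fine_cap(PROHIBITED_PRACTICE, turnover))   # 70000000
    print(fine_cap(HIGH_RISK_OBLIGATION, turnover))  # 30000000
```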

Next Steps

Companies that develop or use AI for their business activities should determine whether their activities are covered by the AI Act, the risk associated with those activities, and then ascertain the corresponding regulatory requirements. Specifically, this will require companies to consider the following:

- First, whether they have a nexus to the EU that triggers the application of the AI Act, such as being located in the EU, placing AI systems or GPAI models on the market in the EU, or providing AI-system outputs in the EU.

- Second, what risk is associated with their AI activities, including whether they provide or use high-risk AI systems, provide GPAI models, or use AI to generate content or interact with individuals.

- Third, depending on the outcome of this risk assessment, identify and ensure compliance with the applicable regulatory obligations under the AI Act, which may range from transparency obligations to more stringent regulations and compliance requirements.

This initial exercise, which determines whether and how the Act applies, is critical, but depending on the nature of one’s AI use, it may only be a first step toward compliance. Indeed, whether or not the Act applies, providers and deployers of AI systems will also have to ensure that the data they use for their AI is accurate, consistent, and appropriate for its intended use. They will also have to ensure that the data collected and used complies with applicable data protection, data privacy, and intellectual property laws and regulations.

Providers and deployers will also have to review their data retention and related policies, particularly as they relate to the Act’s logging requirements, but also more generally with respect to their AI use. Further, providers and deployers will want to revisit their risk management policies and procedures to identify potential data risks and to develop strategies to mitigate them. Finally, providers and deployers will want to develop training programs on best practices for AI use, data governance, and compliance.

If you have any questions about this bulletin, please contact your usual Homburger contact.

Legal Notice

This bulletin reflects the general views of the authors as of the date of this bulletin, without taking into account any specific facts or circumstances. It does not constitute legal advice. Any liability for the accuracy, correctness, completeness, or appropriateness of the contents of this bulletin is expressly excluded.